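Hey, are you into reinforcement learning, one of the hottest topics around 🔥? It may look complicated, but don't worry! 😎 This one post will get you building your own reinforcement learning environments like a pro! Let's get started before it's too late! 😉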

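What will you learn from this post?

- Get an easy, fun, hands-on feel for reinforcement learning environments with OpenAI Gym!

- Build your own reinforcement learning agent with TensorFlow Agents!

- Find out how to dig even deeper into reinforcement learning!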

Why is reinforcement learning so hot? 🤔

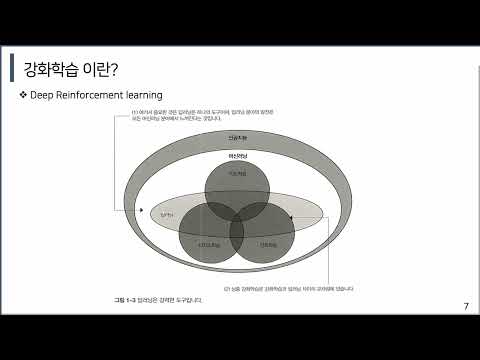

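Put simply, reinforcement learning is the technique of building an "AI that learns by itself." 🤖 It works like training a puppy: you reward the AI when it does well and penalize it when it does badly. Through trial and error, it gradually works out the best behavior on its own. 🐶 Thanks to this, it's driving innovation in all sorts of fields, from games 🎮 to robots 🤖 to self-driving cars 🚗!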
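Here is a minimal sketch of the simplest RL setting, a multi-armed bandit, to make "learning from rewards" concrete. The agent repeatedly picks one of three actions and gets +1 (praise) or -1 (penalty). It keeps a running average reward per action and uses epsilon-greedy exploration. The win probabilities are made-up example values, not from any real task.

```python
import random


def epsilon_greedy_bandit(win_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely from trial-and-error rewards."""
    rng = random.Random(seed)
    n_actions = len(win_probs)
    estimates = [0.0] * n_actions  # running average reward for each action
    counts = [0] * n_actions  # how many times each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit the best so far
        reward = 1.0 if rng.random() < win_probs[action] else -1.0  # praise (+1) or penalty (-1)
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]  # incremental mean
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])  # hypothetical win probabilities
best = max(range(len(estimates)), key=lambda a: estimates[a])
print('best action:', best, 'estimates:', [round(e, 2) for e in estimates], 'counts:', counts)
```

The small epsilon keeps the agent occasionally trying "worse-looking" actions. Without it, the agent could lock onto whichever action happened to get lucky first.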

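OpenAI Gym: the reinforcement learning playground 🎠

OpenAI Gym is a platform that provides a wide range of environments for developing and testing reinforcement learning algorithms. It's easy to install and use without any complicated setup, which makes it the perfect playground for RL beginners! 🎉

Installing & using OpenAI Gym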

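- Install: one line, `pip install gym`, and you're done! Easy, right? 😜 Note that Gym itself is no longer actively developed. Its maintained successor is Gymnasium: install it with `pip install gymnasium` and import it with `import gymnasium as gym`. The code below uses the API shared by Gym 0.26+ and Gymnasium.

- Pick an environment: Gym offers many environments, from simple ones like `CartPole-v1` and `MountainCar-v0` to complex ones like Atari games 🎮. Pick whichever you like.

- Run the environment: load the environment you picked and run it.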

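```python
import gym  # Gym 0.26+; with Gymnasium, use `import gymnasium as gym` instead

env = gym.make('CartPole-v1', render_mode='human')  # pick CartPole; 'human' opens a render window
observation, info = env.reset(seed=42)  # initialize the environment

for _ in range(100):
    action = env.action_space.sample()  # pick a random action
    # Take the action: "terminated" = episode over (pole fell), "truncated" = time limit hit
    observation, reward, terminated, truncated, info = env.step(action)
    # With render_mode='human', every step is drawn automatically (no env.render() call needed)
    if terminated or truncated:
        observation, info = env.reset()

env.close()
```

Tip: use `env.action_space` and `env.observation_space` to quickly check an environment's actions and states (observations). 🧐 For CartPole, for example, the action space is `Discrete(2)`: push the cart left or right.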

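TensorFlow Agents: your own AI trainer 🧑‍🏫

TensorFlow Agents (TF-Agents) is a reinforcement learning library developed by Google. It helps you implement a variety of RL algorithms easily and deploy the agents you've trained. 💪 With TF-Agents, you can become an AI trainer too!

Installing & using TensorFlow Agents

- Install: a simple `pip install tf-agents` does it!

- Set up your environment: TF-Agents runs on top of TensorFlow, so make sure TensorFlow is installed.

- Create an agent: choose the RL algorithm you want (DQN, PPO, etc.) and create an agent.

- Train: let the agent interact with the environment and learn from it.

- Evaluate: measure how well the trained agent performs, and improve it as needed.

Example code (training a DQN agent)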

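```python
import tensorflow as tf
from tf_agents.agents.dqn import dqn_agent
from tf_agents.environments import suite_gym, tf_py_environment
from tf_agents.networks import q_network
from tf_agents.policies import random_tf_policy
from tf_agents.replay_buffers import tf_uniform_replay_buffer
from tf_agents.trajectories import trajectory
from tf_agents.utils import common

# 1. Set up the environments (separate instances for training and evaluation)
env_name = 'CartPole-v1'
train_py_env = suite_gym.load(env_name)
eval_py_env = suite_gym.load(env_name)
train_env = tf_py_environment.TFPyEnvironment(train_py_env)
eval_env = tf_py_environment.TFPyEnvironment(eval_py_env)

# 2. Build the Q-network (one hidden layer with 100 units)
q_net = q_network.QNetwork(
    train_env.observation_spec(),
    train_env.action_spec(),
    fc_layer_params=(100,))

# 3. Create the agent
optimizer = tf.compat.v1.train.AdamOptimizer(learning_rate=1e-3)
train_step_counter = tf.Variable(0)

agent = dqn_agent.DqnAgent(
    train_env.time_step_spec(),
    train_env.action_spec(),
    q_network=q_net,
    optimizer=optimizer,
    td_errors_loss_fn=common.element_wise_squared_loss,
    train_step_counter=train_step_counter)
agent.initialize()

# 4. Create the replay buffer
replay_buffer = tf_uniform_replay_buffer.TFUniformReplayBuffer(
    data_spec=agent.collect_data_spec,
    batch_size=train_env.batch_size,
    max_length=10000)

# 5. Data collection: take one step with `policy` and store the transition
def collect_step(environment, policy, buffer):
    time_step = environment.current_time_step()
    action_step = policy.action(time_step)
    next_time_step = environment.step(action_step.action)
    traj = trajectory.from_transition(time_step, action_step, next_time_step)
    buffer.add_batch(traj)

# Warm up the buffer with a random policy first: sampling from an empty buffer fails
train_env.reset()
random_policy = random_tf_policy.RandomTFPolicy(
    train_env.time_step_spec(), train_env.action_spec())
for _ in range(100):
    collect_step(train_env, random_policy, replay_buffer)

# 6. Training
dataset = replay_buffer.as_dataset(
    num_parallel_calls=3,
    sample_batch_size=64,
    num_steps=2).prefetch(3)  # each sample = 2 consecutive steps (one transition)
iterator = iter(dataset)

agent.train = common.function(agent.train)  # wrap the train step in a tf.function for speed

for _ in range(1000):
    collect_step(train_env, agent.collect_policy, replay_buffer)
    experience, unused_info = next(iterator)
    train_loss = agent.train(experience).loss
    step = agent.train_step_counter.numpy()
    if step % 200 == 0:
        print(f'step {step}: loss = {float(train_loss):.4f}')

# 7. Evaluation (omitted)
```

Caution: TF-Agents can run into compatibility problems depending on your TensorFlow version. 😥 Before installing, check that your TensorFlow and TF-Agents versions are compatible. `suite_gym` is also built on the classic Gym API, so check which Gym version your TF-Agents release expects. Version management is a must! ⚠️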
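For reference, here is the textbook DQN objective that a DQN training step is built around. This is a sketch of the standard formulation. TF-Agents' `DqnAgent` layers its own options on top, such as n-step returns, target-network update settings, and its default loss. Each training sample needs two consecutive time steps to form one transition $(s, a, r, s')$, which is why the dataset above is sampled with `num_steps=2`:

$$
y = r + \gamma\,(1 - d)\,\max_{a'} Q_{\theta^-}(s', a'),
\qquad
\mathcal{L}(\theta) = \mathbb{E}_{(s, a, r, s', d) \sim \mathcal{D}}\Big[\big(y - Q_\theta(s, a)\big)^2\Big]
$$

Here $\gamma$ is the discount factor and $d$ is 1 if $s'$ ends the episode (0 otherwise). $\theta^-$ are the parameters of a separate target network, and $\mathcal{D}$ is the replay buffer. The per-sample squared term is what `td_errors_loss_fn=common.element_wise_squared_loss` computes.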

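Choose your reinforcement learning environment carefully 🧐

The environment has a big influence on how your agent performs, so choose one that fits your goal.

| Environment type | Characteristics | Examples |
|---|---|---|
| Classic Control | Good for simple problems. | CartPole, MountainCar, Pendulum |
| Atari | Lets you test various algorithms in complex game environments. | Breakout, Pong |
| Robotics | Lets you learn robot control, path planning, and similar tasks in simulated physical environments. | FetchReach, FetchPush |
| Custom | For when you need to design your own environment for a specific goal. | e.g. a self-driving simulation, a stock-trading environment |

Tip: start with simple environments and gradually work your way up to harder ones. 🤓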

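Custom environments: build your own lab 🧪

Besides the environments that come with OpenAI Gym, you can also create your own custom environment. The advantage is that you can design exactly the environment your research or project needs. ✨

How to make a custom environment

- Define a new class that inherits from `gym.Env`.

- Implement the `__init__`, `step`, `reset`, `render`, and `close` methods.

- Define `observation_space` and `action_space`.

Example code (a simple Grid World environment)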

```python
import gym  # Gym 0.26+; with Gymnasium, use `import gymnasium as gym` instead
from gym import spaces  # with Gymnasium: `from gymnasium import spaces`
import numpy as np


class GridWorldEnv(gym.Env):
    metadata = {'render_modes': ['human']}

    def __init__(self, grid_size=4):
        super().__init__()
        self.grid_size = grid_size
        # State: index of the agent's cell, 0 .. grid_size*grid_size - 1 (row by row)
        self.observation_space = spaces.Discrete(grid_size * grid_size)
        self.action_space = spaces.Discrete(4)  # 0: up, 1: right, 2: down, 3: left
        self.max_timesteps = 100
        self.reward_range = (0, 1)
        self.goal_position = grid_size * grid_size - 1  # bottom-right corner
        self.current_position = 0  # top-left corner
        self.timestep = 0

    def reset(self, seed=None, options=None):
        super().reset(seed=seed)  # seeds self.np_random
        self.current_position = 0
        self.timestep = 0
        return self._get_obs(), {}  # (observation, info)

    def _get_obs(self):
        return self.current_position

    def step(self, action):
        self.timestep += 1
        row, col = divmod(self.current_position, self.grid_size)
        # A move that would leave the grid keeps the agent where it is
        if action == 0 and row > 0:  # up
            self.current_position -= self.grid_size
        elif action == 1 and col < self.grid_size - 1:  # right
            self.current_position += 1
        elif action == 2 and row < self.grid_size - 1:  # down
            self.current_position += self.grid_size
        elif action == 3 and col > 0:  # left
            self.current_position -= 1

        terminated = self.current_position == self.goal_position  # reached the goal
        truncated = self.timestep >= self.max_timesteps  # ran out of steps
        reward = 1 if terminated else 0
        return self._get_obs(), reward, terminated, truncated, {}

    def render(self):
        grid = np.zeros((self.grid_size, self.grid_size), dtype=int)
        grid[divmod(self.goal_position, self.grid_size)] = 2  # goal
        grid[divmod(self.current_position, self.grid_size)] = 1  # agent (drawn over the goal)
        print(grid)

    def close(self):
        pass


# Example usage
env = GridWorldEnv()
observation, info = env.reset(seed=0)
for _ in range(10):
    action = env.action_space.sample()
    observation, reward, terminated, truncated, info = env.step(action)
    env.render()
    if terminated or truncated:
        observation, info = env.reset()
env.close()
```

Tip: when you build a custom environment, it's important to clearly define its states, actions, and rewards. ✍️
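Here is a minimal tabular Q-learning sketch for quickly training an agent on an environment like this without TF-Agents. To stay runnable without Gym installed, it uses a hypothetical stand-in class, `SimpleGrid`. That class copies the 4x4 grid dynamics described above and returns Gym 0.26-style `reset`/`step` values. The `q_learning` function relies only on those two methods, so it should work with any environment following that convention. The hyperparameters are illustrative, not tuned.

```python
import random


class SimpleGrid:
    """Hypothetical Gym-free stand-in: 4x4 grid, start top-left, goal bottom-right."""

    def __init__(self, grid_size=4, max_timesteps=100):
        self.n = grid_size
        self.max_timesteps = max_timesteps
        self.goal = grid_size * grid_size - 1

    def reset(self):
        self.pos, self.t = 0, 0
        return self.pos, {}  # (observation, info)

    def step(self, action):  # 0: up, 1: right, 2: down, 3: left
        self.t += 1
        row, col = divmod(self.pos, self.n)
        if action == 0 and row > 0:
            self.pos -= self.n
        elif action == 1 and col < self.n - 1:
            self.pos += 1
        elif action == 2 and row < self.n - 1:
            self.pos += self.n
        elif action == 3 and col > 0:
            self.pos -= 1
        terminated = self.pos == self.goal
        truncated = self.t >= self.max_timesteps
        return self.pos, (1 if terminated else 0), terminated, truncated, {}


def q_learning(env, n_states, n_actions, episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]  # Q-table, all zeros
    for _ in range(episodes):
        state, _ = env.reset()
        done = False
        while not done:
            if rng.random() < epsilon:  # explore
                action = rng.randrange(n_actions)
            else:  # exploit, breaking ties randomly
                best = max(q[state])
                action = rng.choice([a for a in range(n_actions) if q[state][a] == best])
            next_state, reward, terminated, truncated, _ = env.step(action)
            # TD target: no bootstrapping from a terminal state
            target = reward if terminated else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state, done = next_state, terminated or truncated
    return q


def greedy_rollout(env, q, max_steps=20):
    """Follow the learned greedy policy and return the visited states."""
    state, _ = env.reset()
    path = [state]
    for _ in range(max_steps):
        action = max(range(len(q[state])), key=lambda a: q[state][a])
        state, _, terminated, truncated, _ = env.step(action)
        path.append(state)
        if terminated or truncated:
            break
    return path


env = SimpleGrid()
q = q_learning(env, n_states=16, n_actions=4)
print(greedy_rollout(env, q))  # states visited from the start cell 0
```

Because the reward arrives only at the goal, the value spreads backward one cell at a time over many episodes. Epsilon-greedy exploration keeps the agent visiting new cells while that happens.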

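Distributed training: who needs a supercomputer! 💻

Training a reinforcement learning agent can take a long time. ⏰ Distributed training spreads the work across multiple machines (or GPUs) to cut down training time! 🚀

How to do distributed training

- Use TensorFlow's distributed training API (`tf.distribute`).

- Install TensorFlow on several machines and configure them as a cluster.

- Split the training data (or experience collection) across the machines so each one processes its share in parallel.

- Combine the results, for example by averaging gradients, to update the agent and improve its performance.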
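Here is a single-process simulation of synchronous data-parallel training, to make "split the data, then combine the results" concrete. This is the pattern that `tf.distribute.MirroredStrategy` implements across devices. It's only a sketch: the "workers" are list entries rather than machines, and the model is a toy linear regression instead of an RL agent. Nothing here is TensorFlow or TF-Agents API.

```python
import random


def shard_gradient(w, b, shard):
    """Gradient of the mean squared error of y ≈ w*x + b on one worker's data shard."""
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return gw / len(shard), gb / len(shard)


def data_parallel_sgd(data, num_workers=4, lr=0.05, steps=500):
    # Split the data into equal shards, one per (simulated) worker
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = b = 0.0  # shared parameters, identical on every worker
    for _ in range(steps):
        # Each worker computes a gradient on its own shard (in parallel on a real cluster)
        grads = [shard_gradient(w, b, shard) for shard in shards]
        # "All-reduce": average the gradients so every worker applies the same update
        gw = sum(g[0] for g in grads) / num_workers
        gb = sum(g[1] for g in grads) / num_workers
        w, b = w - lr * gw, b - lr * gb
    return w, b


rng = random.Random(0)
data = [(x, 3 * x + 1) for x in (rng.uniform(-1, 1) for _ in range(200))]  # noise-free y = 3x + 1
w, b = data_parallel_sgd(data)
print(f'w = {w:.3f}, b = {b:.3f}')
```

All shards here have the same size, so the averaged gradient equals the full-batch gradient. The result matches ordinary single-machine gradient descent (w close to 3, b close to 1); the speedup on a real cluster comes from computing the shard gradients simultaneously.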

Tip: TF-Agents provides a variety of tools for distributed training. 🛠️ Check the TF-Agents documentation to set up your own distributed training environment.

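Where can you use reinforcement learning? 🤔

Reinforcement learning is being applied in a wide range of fields. Here are a few examples!

- Games: AIs trained with reinforcement learning, such as AlphaGo and AlphaZero, have played beyond the level of top human players. 😲

- Robotics: from robot-arm control to autonomous mobile robots, reinforcement learning helps robots move more precisely. 🤖

- Self-driving: reinforcement learning is being researched as a way for self-driving cars to learn driving behavior on their own. 🚗

- Recommender systems: reinforcement learning is being applied to recommending the best content to users on services like YouTube and Netflix. 📺

- Finance: reinforcement learning is also being explored in finance, for tasks like stock trading and portfolio management. 📈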

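5 more topics for digging deeper into reinforcement learning 📚

Dissecting reinforcement learning algorithms 🔍

DQN, PPO, A2C... are all these RL algorithms getting confusing? 🤔 Let's compare the features, strengths, and weaknesses of each one and learn how to pick the right algorithm for you!

The secret to reward function design 🗝️

The reward function is the heart of reinforcement learning! 🔑 Depending on how you design it, what your agent learns can change completely. Let's learn how to design effective reward functions and build one of our own!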
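One well-studied design tool is potential-based reward shaping (Ng, Harada & Russell, 1999). It adds an extra term F(s, s') = γΦ(s') − Φ(s) on top of the environment's reward. The goal is to give the agent hints when rewards are sparse, and this form of shaping is known to leave the optimal policy unchanged. The sketch assumes the 4x4 Grid World from earlier and picks Φ(s) = −(Manhattan distance to the goal) as an illustrative potential.

```python
def manhattan_potential(state, grid_size=4):
    """Phi(s): minus the Manhattan distance from cell `state` to the bottom-right goal."""
    row, col = divmod(state, grid_size)
    return -((grid_size - 1 - row) + (grid_size - 1 - col))


def shaped_reward(reward, state, next_state, terminated, gamma=0.9, potential=manhattan_potential):
    """Environment reward plus the shaping term F = gamma * Phi(s') - Phi(s)."""
    next_phi = 0.0 if terminated else potential(next_state)  # convention: Phi = 0 at terminal states
    return reward + gamma * next_phi - potential(state)


print(shaped_reward(0, 0, 1, False))   # step toward the goal: positive bonus
print(shaped_reward(0, 1, 0, False))   # step away from the goal: negative
print(shaped_reward(1, 14, 15, True))  # final step into the goal
```

The shaping term is a difference of potentials, so over a whole episode it largely cancels out. That is why it can speed up learning without changing which policy is best.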

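Mastering imitation learning 💯

Imitation learning means learning by copying an expert's behavior! And it's not just mimicry: it can also help an agent build up its own ability to learn! 😮 Let's look at the basic principles of imitation learning and its many use cases, then build our own imitation learning model!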
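The simplest form of imitation learning is behavior cloning. You treat the expert's (state, action) pairs as a supervised-learning dataset and learn a policy that predicts the expert's action. A real implementation would train a classifier or neural network. The minimal tabular sketch below instead picks the expert's most frequent action in each state. The demonstrations are made-up examples for the 4x4 Grid World (1 = right, 2 = down).

```python
from collections import Counter, defaultdict


def behavior_cloning(demonstrations):
    """Tabular behavior cloning: in each state, imitate the expert's most frequent action."""
    actions_by_state = defaultdict(Counter)
    for state, action in demonstrations:
        actions_by_state[state][action] += 1
    # most_common(1) returns [(action, count)]; ties go to the action seen first
    return {state: counter.most_common(1)[0][0] for state, counter in actions_by_state.items()}


# Hypothetical expert demonstrations: two paths from cell 0 to the goal at cell 15
demos = [(0, 1), (1, 1), (2, 1), (3, 2), (7, 2), (11, 2),
         (0, 2), (4, 1), (5, 1), (6, 1), (7, 2), (11, 2),
         (0, 1)]
policy = behavior_cloning(demos)
print(policy)
```

States the expert never visited get no action at all in this table. This is a small-scale picture of why plain behavior cloning struggles once the agent drifts off the expert's path.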

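Taking on multi-agent reinforcement learning (Multi-Agent RL) ⚔️

Hard alone, possible together! In multi-agent reinforcement learning, multiple agents learn while cooperating or competing with each other! 🤝 Let's learn how to tackle complex problems and build even more powerful AI!

Ethical issues in reinforcement learning and how to address them ⚖️

AI needs ethics too! Let's look at how RL algorithms affect society and what ethical problems they raise, and explore ways to address them. 🧐 Let's think together about how to become responsible AI developers!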

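Wrapping up this post on reinforcement learning… ✍️

And that's how to set up a reinforcement learning environment! 🎉 Don't forget: with OpenAI Gym and TensorFlow Agents, anyone can get started with reinforcement learning easily and have fun doing it! 😉

Of course, reinforcement learning is a field with plenty of room left to grow. But that also means its potential is wide open. Keep up your interest and effort, and build amazing AI that changes the future! 🌟

If you have any more questions, feel free to ask in the comments! I'll answer in as much detail as I can. 😊 See you next time with more useful info! 👋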
